Backpropagation Programmer

A Gentle Introduction to Backpropagation, Shashi Sathyanarayana, Ph.D., July 22, 2014. The Backpropagation Algorithm – Entire Network: there is a glaring problem in training a neural network using the update rule above. We don't know what the “expected” output of any of the internal edges in the graph is.

I am taking a class on machine learning at university, but there are also some really good Udacity courses on the subject, specifically those by Charles Isbell. I can give you a brief overview of machine learning, though.

Neural networks are just one part of machine learning and are usually classified under supervised or unsupervised learning. The supervised category also includes methods such as decision trees.

Unsupervised learning is another facet, one that focuses on clustering or grouping a set of data. Lastly, reinforcement learning is the most interesting and most promising for the future of AI. RL is all about determining which actions to take based on a delayed reward (winning or losing). EDIT: corrected the classification of ANNs.

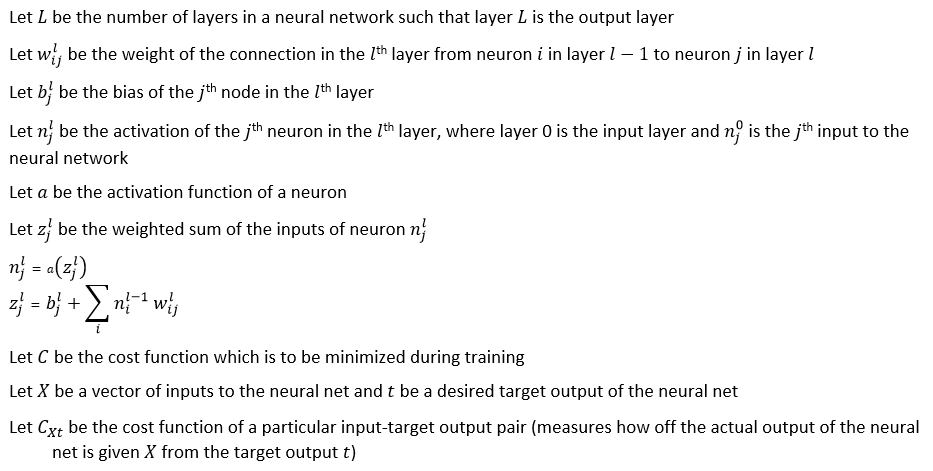

I've recently completed Professor Ng's Machine Learning course on Coursera, and while I loved the entire course, I never really managed to understand the backpropagation algorithm for training neural networks. My problem is that he only ever teaches the vectorised implementation of it for fully connected feed-forward networks. My linear algebra is rusty, and I think it would be much easier to understand if somebody could teach me the general-purpose algorithm, maybe in a node-oriented fashion.

I'll try to phrase the problem simply, but I may be misunderstanding how backprop works, so if this doesn't make sense, disregard it: for any given node N, given the input weights/values, the output weights/values, and the error/cost of all the nodes that N outputs to, how do I calculate the 'cost' of N and use it to update the input weights?
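In the node-oriented view, the usual answer is a per-node quantity often called the delta, δ_N = ∂E/∂net_N: take the delta of each node k that N outputs to, weight it by the edge weight w_Nk, sum these, and scale by the slope of N's own activation function. The gradient for each input weight w_iN is then δ_N times the value o_i arriving on that input. The sketch below is a minimal illustration under assumptions the question does not state: a logistic sigmoid activation, a squared-error cost E = ½(output - target)² at the output node, and plain gradient descent. The helper names and the example numbers are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_prime(net: float) -> float:
    """Slope of the logistic sigmoid at net (assumed activation function)."""
    s = sigmoid(net)
    return s * (1.0 - s)


def output_delta(net: float, target: float) -> float:
    """dE/dnet for an output node, assuming E = 0.5 * (output - target) ** 2."""
    return (sigmoid(net) - target) * sigmoid_prime(net)


def hidden_delta(net_n: float, downstream: list[tuple[float, float]]) -> float:
    """dE/dnet for node N.

    downstream holds one (w_nk, delta_k) pair per node k that N outputs to:
    the weight on the edge N -> k and the delta already computed for k.
    """
    return sigmoid_prime(net_n) * sum(w_nk * delta_k for w_nk, delta_k in downstream)


def input_weight_gradients(delta_n: float, inputs: list[float]) -> list[float]:
    """dE/dw_iN = delta_N * o_i for each value o_i arriving at N."""
    return [delta_n * o_i for o_i in inputs]


# Hypothetical example: N has two inputs and feeds a single output node k.
inputs = [0.5, -1.0]  # o_i arriving at N
w_in = [0.4, 0.2]     # w_iN
w_nk = 0.7            # weight on the edge N -> k
target = 1.0          # desired output of k

# Forward pass (biases omitted to keep the example short).
net_n = sum(o_i * w for o_i, w in zip(inputs, w_in))
o_n = sigmoid(net_n)
net_k = w_nk * o_n

# Backward pass: the output node first, then N.
delta_k = output_delta(net_k, target)
delta_n = hidden_delta(net_n, [(w_nk, delta_k)])
grads = input_weight_gradients(delta_n, inputs)

# Gradient-descent update of N's input weights (step against the gradient).
learning_rate = 0.1
updated_w_in = [w - learning_rate * g for w, g in zip(w_in, grads)]
print(delta_n, grads, updated_w_in)
```

Because each node only needs its own net input, the values arriving on its inputs, and the deltas already computed for the nodes it feeds, the backward pass can visit the nodes of the graph one at a time in reverse topological order, with no matrix notation at all.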

Let's consider a node in a back-propagation (BP) network. It has multiple inputs and produces an output value. We want to use error correction for training, so the node will also update its weights based on an error estimate for that node.
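Such a node can be sketched as a small object that remembers what it saw on the forward pass, because the error-correction step needs those values later. In the sketch below, the `Node` class and its method names are hypothetical, the activation is assumed to be a logistic sigmoid, and the error estimate `delta` is assumed to be the derivative of the error with respect to the node's net input, computed elsewhere. The usage at the end trains a single output node toward a made-up target.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Node:
    weights: list[float]  # one weight per input
    bias: float = 0.0     # theta: acts as a weight on a constant 1.0 input
    last_inputs: list[float] = field(default_factory=list)
    last_net: float = 0.0
    last_output: float = 0.0

    def forward(self, inputs: list[float]) -> float:
        """Compute the output and remember the values needed for training."""
        if len(inputs) != len(self.weights):
            raise ValueError("expected one input per weight")
        self.last_inputs = list(inputs)
        self.last_net = sum(o * w for o, w in zip(inputs, self.weights)) + self.bias
        self.last_output = 1.0 / (1.0 + math.exp(-self.last_net))  # assumed sigmoid
        return self.last_output

    def correct(self, delta: float, learning_rate: float) -> None:
        """Gradient-descent step, given delta = dE/dnet for this node.

        Uses the inputs recorded by the most recent forward() call.
        """
        self.weights = [w - learning_rate * delta * o
                        for w, o in zip(self.weights, self.last_inputs)]
        self.bias -= learning_rate * delta  # the bias's "input" is always 1.0


# Hypothetical usage: a lone output node trained toward target 1.0.
node = Node(weights=[0.1, -0.2])
x, target = [1.0, 0.5], 1.0
before = node.forward(x)
for _ in range(100):
    out = node.forward(x)
    delta = (out - target) * out * (1.0 - out)  # assumes squared error at the output
    node.correct(delta, learning_rate=0.5)
after = node.forward(x)
print(before, after)
```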

Each node has a bias value, θ. You can think of this as a weight on an internal input whose value is always 1.0. The activation is the sum of the weighted inputs plus the bias value. Let's refer to our node of interest as j, to nodes in the preceding layer as i, and to nodes in the succeeding layer as k. The activation of our node j is then:

$$\text{net}_j = \sum_i o_i \, w_{ij} + \theta_j$$

That is, the activation value for j is the sum of the products of the output o_i of each node i and the corresponding weight w_ij linking nodes i and j, plus the bias value θ_j.
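The activation formula maps directly onto a few lines of code. The sketch below is illustrative only: the function names and the numbers are made up. It computes net_j as written and again with θ_j folded in as the weight on an extra input fixed at 1.0, showing that the two views of the bias agree.

```python
def net_activation(outputs: list[float], weights: list[float], theta: float) -> float:
    """net_j = sum over i of o_i * w_ij, plus the bias theta_j."""
    if len(outputs) != len(weights):
        raise ValueError("need exactly one weight w_ij per input o_i")
    return sum(o_i * w_ij for o_i, w_ij in zip(outputs, weights)) + theta


def net_activation_bias_as_weight(outputs: list[float], weights: list[float], theta: float) -> float:
    """Same quantity, with theta_j treated as the weight on a constant 1.0 input."""
    if len(outputs) != len(weights):
        raise ValueError("need exactly one weight w_ij per input o_i")
    extended_outputs = outputs + [1.0]   # append the constant bias input
    extended_weights = weights + [theta]  # and its weight, theta_j
    return sum(o_i * w_ij for o_i, w_ij in zip(extended_outputs, extended_weights))


o = [0.5, 1.0, 0.25]    # outputs o_i of the preceding layer (made-up values)
w_j = [2.0, -1.5, 4.0]  # weights w_ij into node j (made-up values)
theta_j = 0.25          # bias of node j

print(net_activation(o, w_j, theta_j))                 # 0.75
print(net_activation_bias_as_weight(o, w_j, theta_j))  # 0.75
```

Folding the bias into the weight list is why many implementations store θ_j alongside the other weights and update it with the same rule, using 1.0 as its input value.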